So I rebuilt Vercel...

Recently, I've been looking for a challenge in the web world out of boredom. While working on my personal site, a curious thought popped into my head - How does Vercel work? I mean, someone had to actually go build the platform I use to build my projects. That thought sparked off hours of research and wrangling infrastructure.

So... I made Zercel. It's like Vercel. But Mine. It doesn't have all the bells and whistles that Vercel provides, but it's a functional CI/CD platform that can deploy your sites ✨ automagically ✨.

Vercel? What's that?

Vercel is a platform that allows you to deploy your web projects with ease. It's a CI/CD platform that can deploy static sites, server sites, and APIs. It's a great tool for developers who want to focus on building their projects rather than worrying about the infrastructure. I use Vercel for a lot of my projects - it just works and keeps me from worrying about the nitty-gritty details of deployment.

But how does Vercel work?

Here's a general breakdown:

- You push your code to a git repository.

- Vercel listens for changes in your repo.

- When it detects a change, it runs the `npm run build` (or yarn/pnpm etc.) script in your repo.

- It takes the files generated by the build script and serves them (or runs a server if you have a Next.js app or an API).

- It handles all the routing, caching, and scaling for you.

It's a magical experience to go from code -> link in under 5 minutes. But behind the scenes, there's a lot of complexity involved in making a platform like Vercel work. You have to worry about security, scaling, versioning, costs, and user experience while juggling all the moving parts. I'm not going to rebuild everything, but the core features of Vercel are what I was aiming for.

Building Zercel

Planning

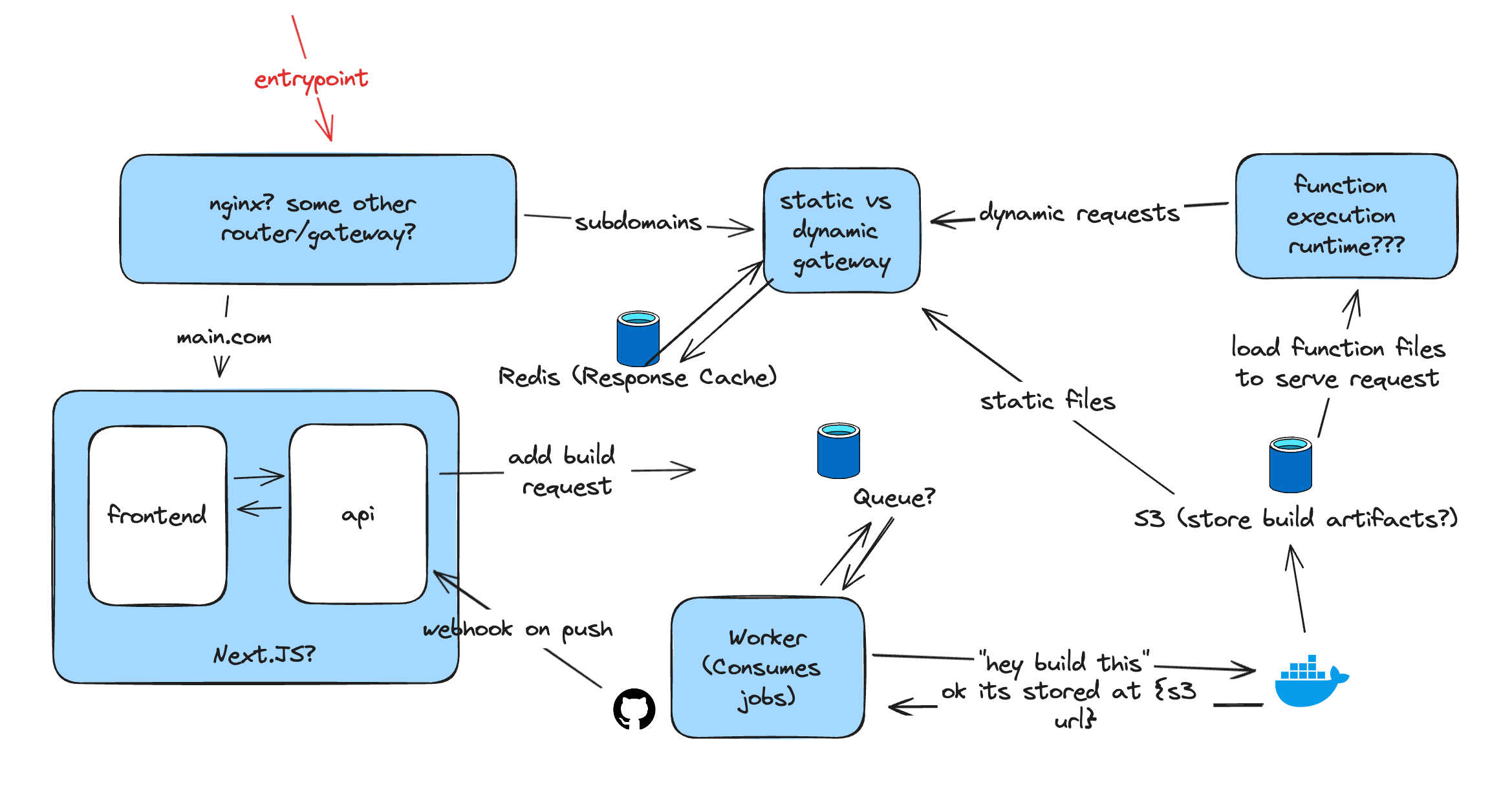

I tried to plan out how I would build Zercel. For a cloud platform, I chose GCP because I’m familiar with it and wanted to get started quickly.

I thought about using a Next.js app with some kind of task queue to manage site builds. The queue would feed into a build job runner that would handle the build process and deploy the site to a storage bucket. I planned to use something like Redis for queuing, another Redis instance for response caching, and store artifacts in a bucket. Then, I’d use Nginx or some API gateway to tie everything together.

I missed a lot of things

Turns out, I was both overcomplicating and underestimating the complexity of the project. Since I don’t have a ton of experience with this, I didn’t fully realize all the moving parts involved. Some major things I initially overlooked:

- Isolated build environments

- Server-rendered sites can't be stored in a bucket - you need a server

- No good way to build Docker images in a container

- I don’t even need an event queue - my build services already have a pseudo-queue built-in

- Streaming (build and runtime) logs

- (...and probably more that I haven't realized yet)

Let's jump into building.

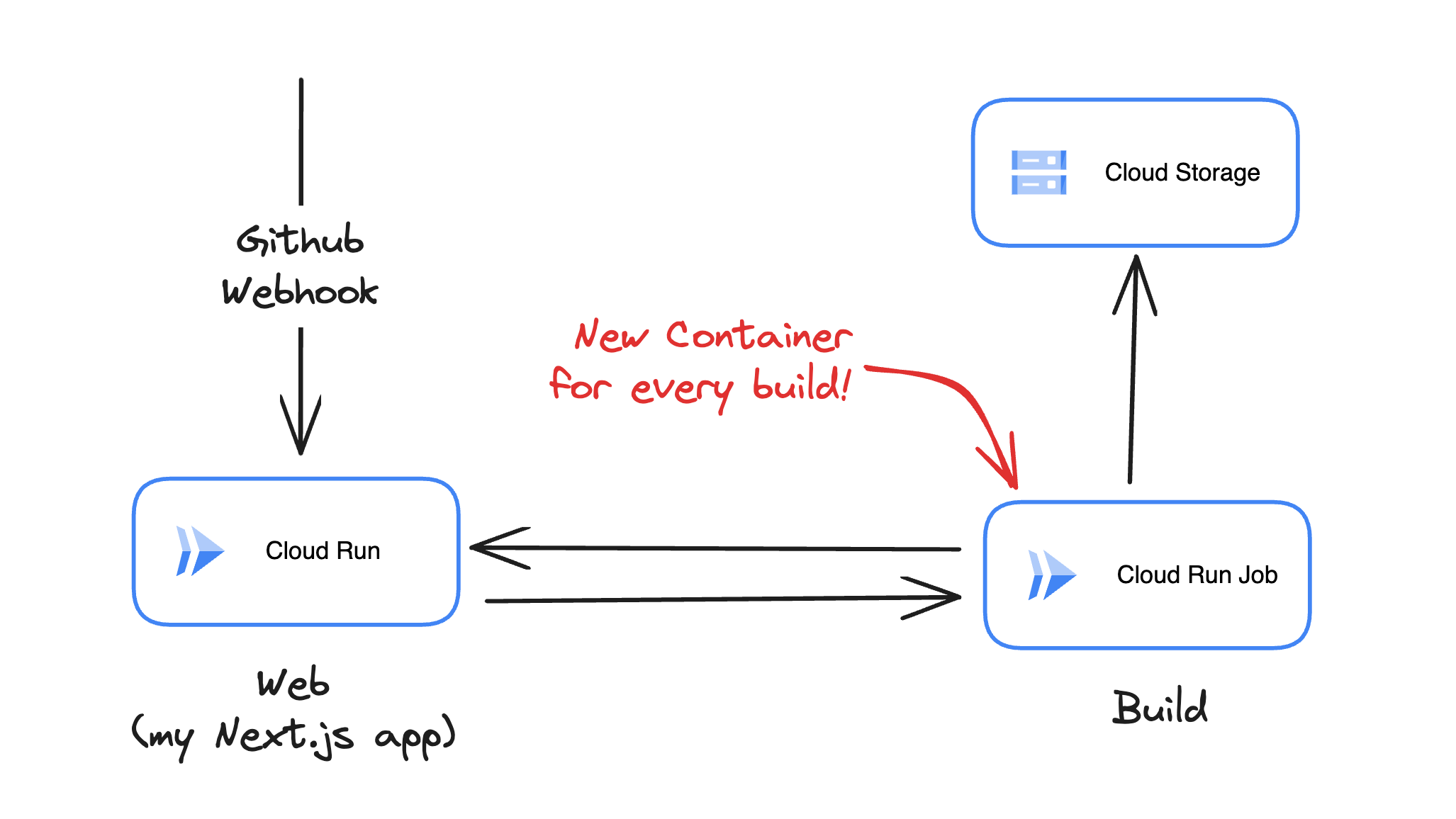

Static sites

Building static sites is surprisingly easy. Whenever a new commit is pushed to a repository, GitHub sends a webhook to Zercel. Zercel checks if the commit is relevant (based on the repo, branch and path) and if it is, it creates a new build job. The job spins up a container, installs dependencies, runs the build, and stores the build output in a folder within a bucket.
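To make the relevance check concrete, here's a minimal sketch of how it could look. This is not Zercel's actual code: the `ProjectConfig` shape and the `isRelevantPush` name are made up for illustration, and the `PushEvent` type only covers the handful of fields from GitHub's push webhook payload that the check needs.

```typescript
// Subset of GitHub's push webhook payload used by this check.
interface PushEvent {
  ref: string; // e.g. "refs/heads/main"
  repository: { full_name: string }; // e.g. "owner/repo"
  commits: { added: string[]; modified: string[]; removed: string[] }[];
}

// Hypothetical per-project settings; the real schema may look different.
interface ProjectConfig {
  repo: string; // "owner/repo"
  branch: string; // branch that triggers deployments
  rootDir: string; // "" means the whole repo is relevant
}

// Returns true only when the push targets the project's repo and branch
// and touches at least one file under the project's root directory.
function isRelevantPush(event: PushEvent, project: ProjectConfig): boolean {
  if (event.repository.full_name !== project.repo) return false;
  if (event.ref !== `refs/heads/${project.branch}`) return false;
  if (project.rootDir === "") return true;

  const prefix = project.rootDir.endsWith("/")
    ? project.rootDir
    : project.rootDir + "/";
  return event.commits.some((commit) =>
    [...commit.added, ...commit.modified, ...commit.removed].some((file) =>
      file.startsWith(prefix),
    ),
  );
}
```

A push that only touches `apps/api/` would be skipped for a project rooted at `apps/web`, which is handy for monorepos.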

To keep track of different versions, I use the commit SHA - a unique hash generated by Git for every commit - as the folder name. Since SHAs are cryptographic hashes, collisions are virtually impossible, ensuring each deployment is reliably versioned.

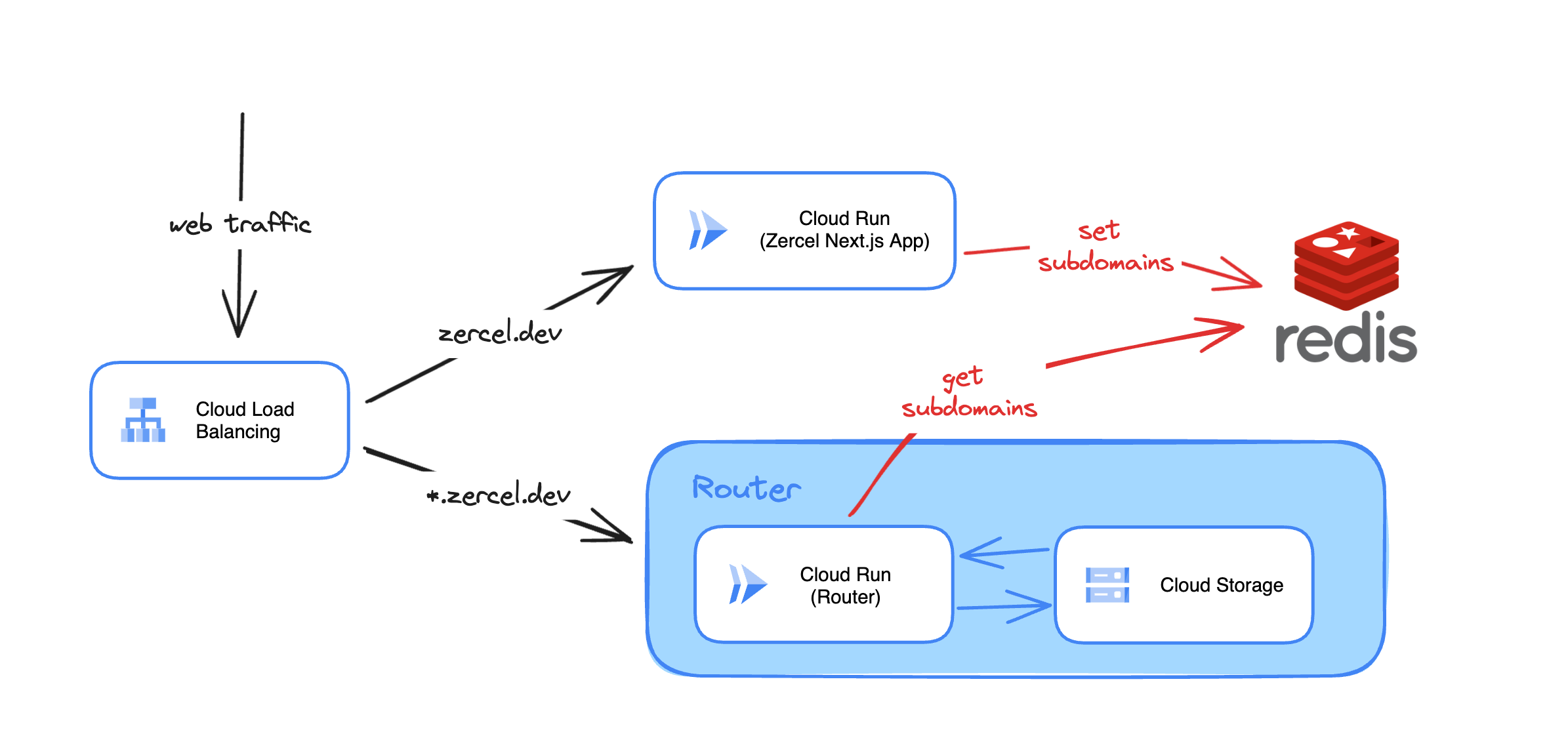

To host static sites, all I have to do is serve these files over HTTP. I built a simple router using Hono that maps subdomains to commit SHAs using a dictionary. If the SHA exists as a folder in the bucket, it serves the files. If not, it returns a 404. It's a simple setup that works well for static sites. Once I confirmed it worked locally, I tried deploying to GCP behind my domain, zercel.dev.
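The lookup itself boils down to two small steps: pull the subdomain out of the `Host` header, then turn it into a path inside the bucket. Here's a rough, framework-free sketch of that logic. The function names and the `index.html` fallback are my assumptions for illustration; the real router is built on Hono and may handle paths differently.

```typescript
const BASE_DOMAIN = "zercel.dev";

// Extracts "my-site" from "my-site.zercel.dev".
// Returns null for the bare domain, nested subdomains, or other hosts.
function subdomainOf(host: string): string | null {
  const name = host.toLowerCase().replace(/:\d+$/, ""); // drop any port
  const suffix = "." + BASE_DOMAIN;
  if (!name.endsWith(suffix)) return null;
  const sub = name.slice(0, name.length - suffix.length);
  return sub.length > 0 && sub.indexOf(".") === -1 ? sub : null;
}

// Maps a request to a bucket object key of the form "<sha>/<file>".
// Returns null when the router should answer with a 404.
function resolveObjectKey(
  host: string,
  path: string,
  deployments: Map<string, string>, // subdomain -> commit SHA
): string | null {
  const sub = subdomainOf(host);
  if (sub === null) return null;

  const sha = deployments.get(sub);
  if (sha === undefined) return null;

  const clean = path.replace(/^\/+/, "");
  // Refuse path traversal out of the deployment's folder.
  if (clean.split("/").some((segment) => segment === "..")) return null;

  // Assumed convention: directory-style paths serve index.html.
  const file = clean === "" || clean.endsWith("/") ? clean + "index.html" : clean;
  return `${sha}/${file}`;
}
```

With a mapping of `blog -> abc123`, a request for `blog.zercel.dev/` resolves to the object `abc123/index.html`, and an unknown subdomain resolves to `null`.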

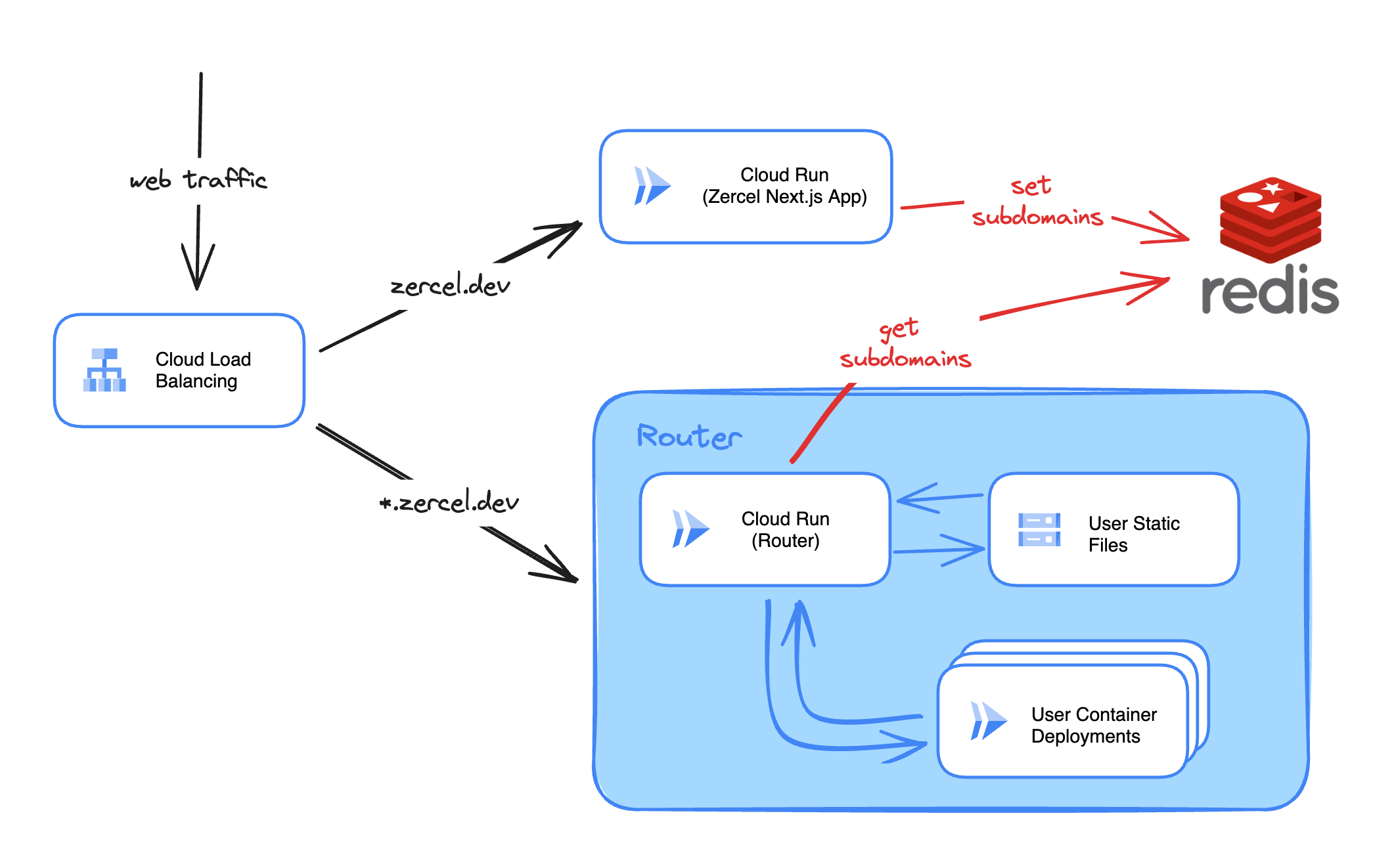

.dev domains are HTTPS-only, so I needed to make sure I served these files over HTTPS. I set up a GCP Load Balancer to accept all traffic from zercel.dev and *.zercel.dev and route it appropriately. Specifically:

- any traffic to `*.zercel.dev` goes to the router

- any traffic to `zercel.dev` goes to Zercel itself, deployed as a Next.js app on a Cloud Run instance

Since the router maps subdomains to commit SHAs, it requires a persistent, fast-updating dictionary. So, I switched to using a persistent Redis cache, which allows my Next.js app to instantly update subdomain <-> SHA mappings as new builds complete. GCP provides a managed Redis service called Cloud Memorystore, but it's quite expensive for my small dev project. Instead, I used the free tier of Upstash for my Redis instance.

To serve data over HTTPS, I also needed an SSL certificate that encrypts the traffic. Usually, SSL certificates are generated per-domain, but they take from a few minutes to a few hours to be provisioned on GCP. I needed a way to generate SSL certificates on the fly for any subdomain of zercel.dev.

This is actually a common pattern, and it's where wildcard SSL certificates are perfect. They allow me to generate one certificate for all subdomains of zercel.dev (i.e. *.zercel.dev). Unfortunately, the Google-managed certificates you attach directly to a GCP Load Balancer don't support wildcards, so I had to find a workaround. After bashing my head against the GCP docs for a few hours, I stumbled upon Certificate Manager's certificate maps and got something working.

In hindsight, I should have set up a Cloudflare proxy (that I was going to use later anyways as a tiered CDN) to handle SSL termination. It would have saved me a lot of time and effort.

Server sites

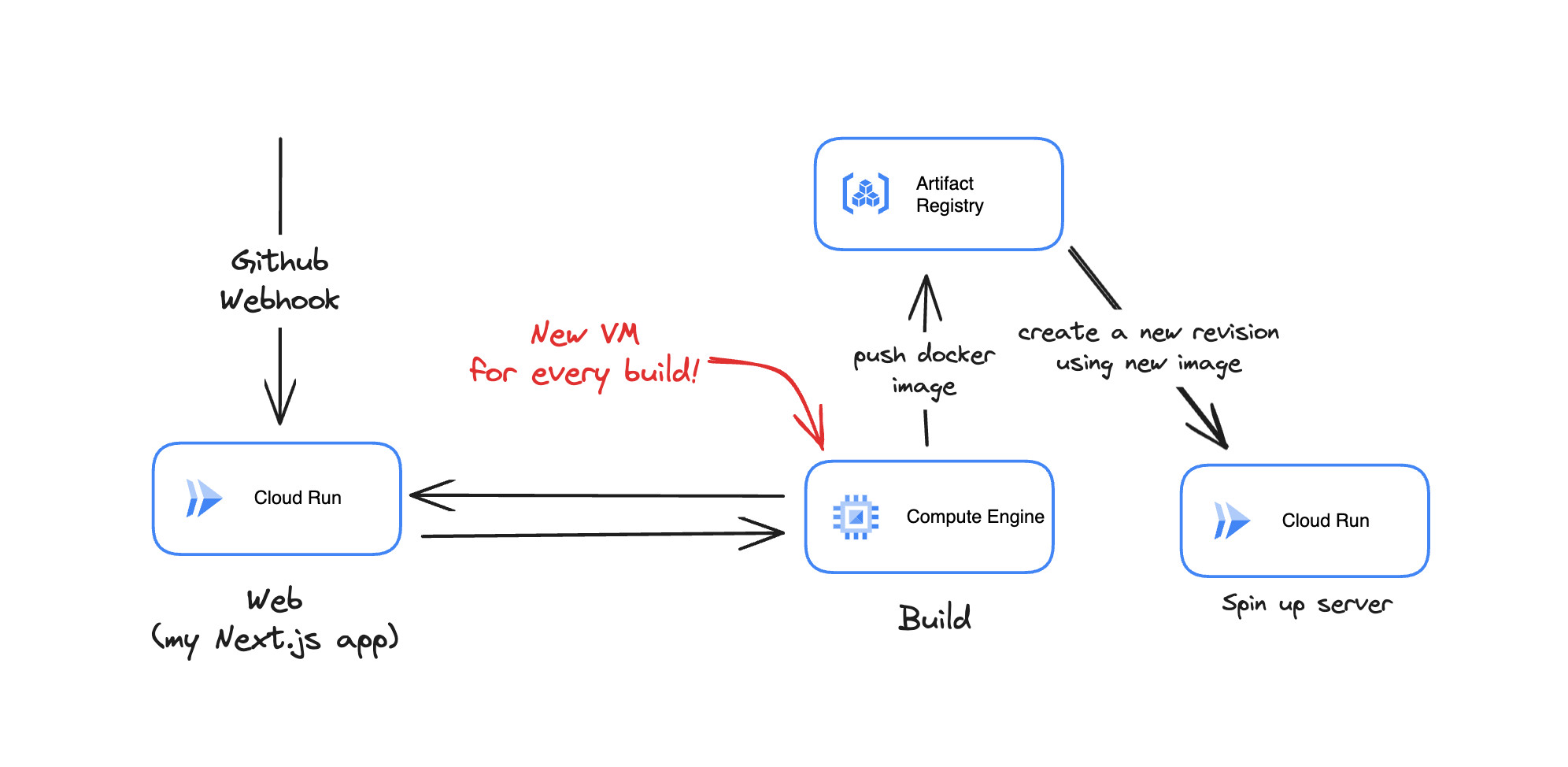

Next up: server-rendered sites. These were a whole new challenge. Unlike static sites, which can simply be built and uploaded to a bucket, server sites require running backend code, meaning I needed to package and deploy them as containers.

I thought I could follow the same approach as static sites: build everything inside a container and then deploy the output. But I quickly ran into a problem: you can't easily build Docker images inside a Docker container. (Technically, you can with Kaniko, but it was too complex for me to set up securely.)

So I used VMs. I used GCP's Batch service to spin up a VM for each build. The VM uses a standardized Dockerfile I wrote to build an image for the server site. Once the image is built, I push it to GCP's Container Registry, use a webhook to monitor the images, and auto-deploy new revisions to Cloud Run. This way, I can deploy server sites with the same ease as static sites.

Cloud Run allows me to set the minimum number of instances to 0 on a per-revision basis. This means I can have a revision for each build and only pay for the instances that are actually serving traffic. It also allows me to easily roll back to a previous version by changing the URL to the old revision, keeping the versioning as simple as changing the folder name for static sites.

With that in mind, all I had to do now was make my router handle both static and server sites. I updated the router to check if the subdomain entry had a URL or a SHA. If it had a URL, it would proxy the request to the Cloud Run instance. If it had a SHA, it would serve the static files from the bucket.
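Here's a small sketch of that branching, assuming each subdomain entry carries either a `sha` or a `url` field. The type and function names are invented for illustration, and the function only decides what to do; actually fetching from the bucket or proxying to Cloud Run is left out.

```typescript
// A subdomain entry is either a static build (SHA) or a server site (URL).
type Deployment = { sha: string } | { url: string };

type RouteAction =
  | { action: "serve"; objectKey: string } // read this object from the bucket
  | { action: "proxy"; target: string } // forward the request to this URL
  | { action: "not_found" };

// `path` is assumed to start with "/", as request paths do.
function route(entry: Deployment | undefined, path: string): RouteAction {
  if (entry === undefined) return { action: "not_found" };

  if ("url" in entry) {
    // Server site: forward to the Cloud Run revision URL.
    const base = entry.url.replace(/\/+$/, "");
    return { action: "proxy", target: base + path };
  }

  // Static site: look up the file under the commit's folder.
  const file = path.endsWith("/") ? path + "index.html" : path;
  return { action: "serve", objectKey: entry.sha + file };
}
```

Because both kinds of entry live in the same dictionary, rolling back is the same operation either way: point the subdomain at an older SHA or an older revision URL.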

Are we Fluid?

One of my goals with this project was to replicate Vercel's fancy new Fluid Compute serverless model. Traditionally, serverless functions (like AWS Lambda) handle one request at a time per instance. Each concurrent request needs its own instance, leading to more cold starts and higher costs. Fluid Compute changes this by allowing a single instance to handle multiple concurrent requests, making it more efficient - especially for I/O-bound tasks like streaming responses from LLMs.

Cloud Run, by design, already operates in a similar fashion. When a request comes in, it spins up a containerized instance to handle it. Unlike traditional serverless functions, however, this instance behaves like a normal server and can handle multiple concurrent requests. It scales up and down dynamically based on demand, mirroring Fluid Compute’s behavior.

So, without doing anything extra, my Cloud Run-based setup naturally benefits from the same efficiency gains as Vercel's Fluid Compute. Zercel is already Fluid!

Fleshing it out

-

GitHub: I setup a Github App to get permissions to listen to webhooks and deploy repos.

-

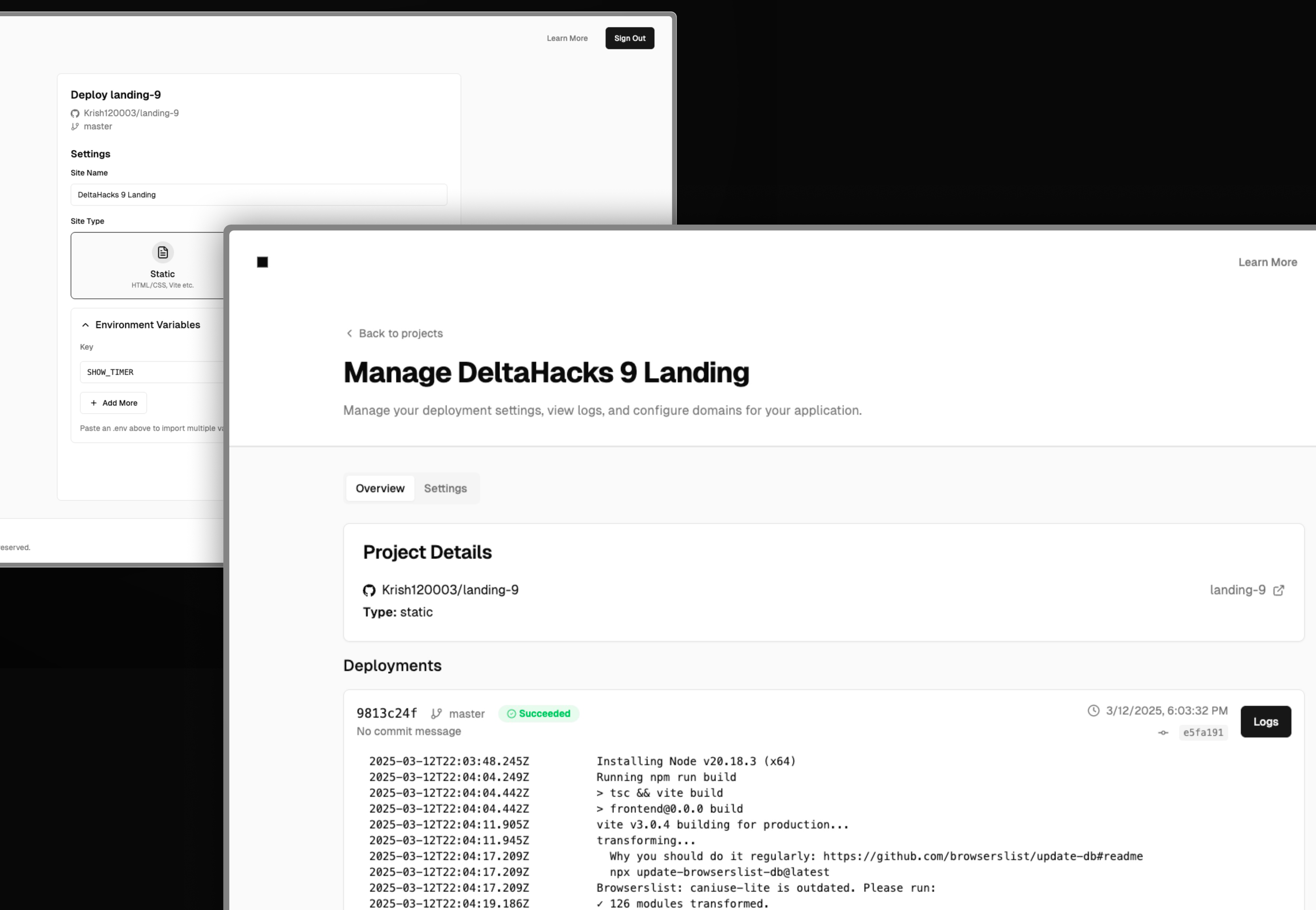

UI: I built a nice Next.js dashboard to manage deployments.

Zercel Deployment / Dashboard UI

Zercel Deployment / Dashboard UI -

Cloudflare: Added caching to serve files faster worldwide.

-

Optimized the router: Added in memory caching for burst traffic.

-

Environment Variables: Users can define env vars for static & server sites.

-

Logs: I added an integration into GCP's Logging service to stream logs to the dashboard.
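For the router's in-memory caching, one simple option is a small cache with a time-to-live sitting in front of the Redis lookups, so a burst of requests to the same subdomain only hits Redis once. The sketch below is an assumption about how that could work, not the actual implementation; `TtlCache` and its injectable clock are hypothetical.

```typescript
// A tiny in-memory cache whose entries expire after `ttlMs` milliseconds.
class TtlCache<V> {
  private entries = new Map<string, { value: V; expiresAt: number }>();
  private ttlMs: number;
  private now: () => number;

  // `now` is injectable so the cache can be tested without real timers.
  constructor(ttlMs: number, now: () => number = () => Date.now()) {
    this.ttlMs = ttlMs;
    this.now = now;
  }

  // Returns the cached value, or undefined if it is missing or expired.
  get(key: string): V | undefined {
    const hit = this.entries.get(key);
    if (hit === undefined) return undefined;
    if (this.now() >= hit.expiresAt) {
      this.entries.delete(key); // evict lazily on read
      return undefined;
    }
    return hit.value;
  }

  set(key: string, value: V): void {
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
  }
}
```

A short TTL (a few seconds) keeps the trade-off reasonable: bursts are absorbed in memory, and a new deployment still shows up quickly once the old entry expires.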

This is far from finished

Even though I've now built something that can deploy both static and server-rendered sites/APIs, there's still a lot missing. Here are some things I figured out along the way:

- Multi-region deployments: I could switch to an Anycast IP and make my router and all Cloud Run instances multi-region to ensure users are served from the closest region. This would be a simple configuration change, but I opted not to implement it due to cost concerns. (Can you guess I'm a broke university student?)

- Version Skew Protection: This is a nice feature to prevent outdated clients from getting errors on updated servers. Vercel implements this by expecting the client to send the deployment ID as one of:

  - a query param (`?dpl=<deployment-id>`)

  - a header (`x-vercel-deployment-id`)

  - a cookie (`__vdpl`)

  Since Zercel is designed to retain previous deployments, the router could simply check these conditions and route to the revision matching the deployment ID.
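As a rough idea of what that router check could look like, here's a sketch that reads the deployment ID from the three places listed above and falls back to the latest deployment. The parameter, header, and cookie names come straight from the list; the precedence order (query, then header, then cookie), the `SkewRequest` shape, and the function names are my assumptions.

```typescript
// Pre-parsed pieces of an incoming request (hypothetical shape).
interface SkewRequest {
  query: Record<string, string | undefined>;
  headers: Record<string, string | undefined>; // keys assumed lowercase
  cookieHeader?: string; // raw "Cookie" header value
}

// Parses "a=1; b=2" into a map of cookie names to values.
function parseCookies(header: string): Map<string, string> {
  const out = new Map<string, string>();
  for (const part of header.split(";")) {
    const eq = part.indexOf("=");
    if (eq === -1) continue;
    out.set(part.slice(0, eq).trim(), part.slice(eq + 1).trim());
  }
  return out;
}

// Returns the deployment ID the client asked for, or null if none was sent.
function pinnedDeploymentId(req: SkewRequest): string | null {
  return (
    req.query["dpl"] ||
    req.headers["x-vercel-deployment-id"] ||
    parseCookies(req.cookieHeader ?? "").get("__vdpl") ||
    null
  );
}

// Routes to the pinned deployment if it is still retained, else the latest.
function chooseDeployment(
  req: SkewRequest,
  latest: string,
  retained: Set<string>,
): string {
  const pinned = pinnedDeploymentId(req);
  return pinned !== null && retained.has(pinned) ? pinned : latest;
}
```

Falling back to the latest deployment when the pinned one is unknown means a stale or bogus ID never produces an error by itself.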

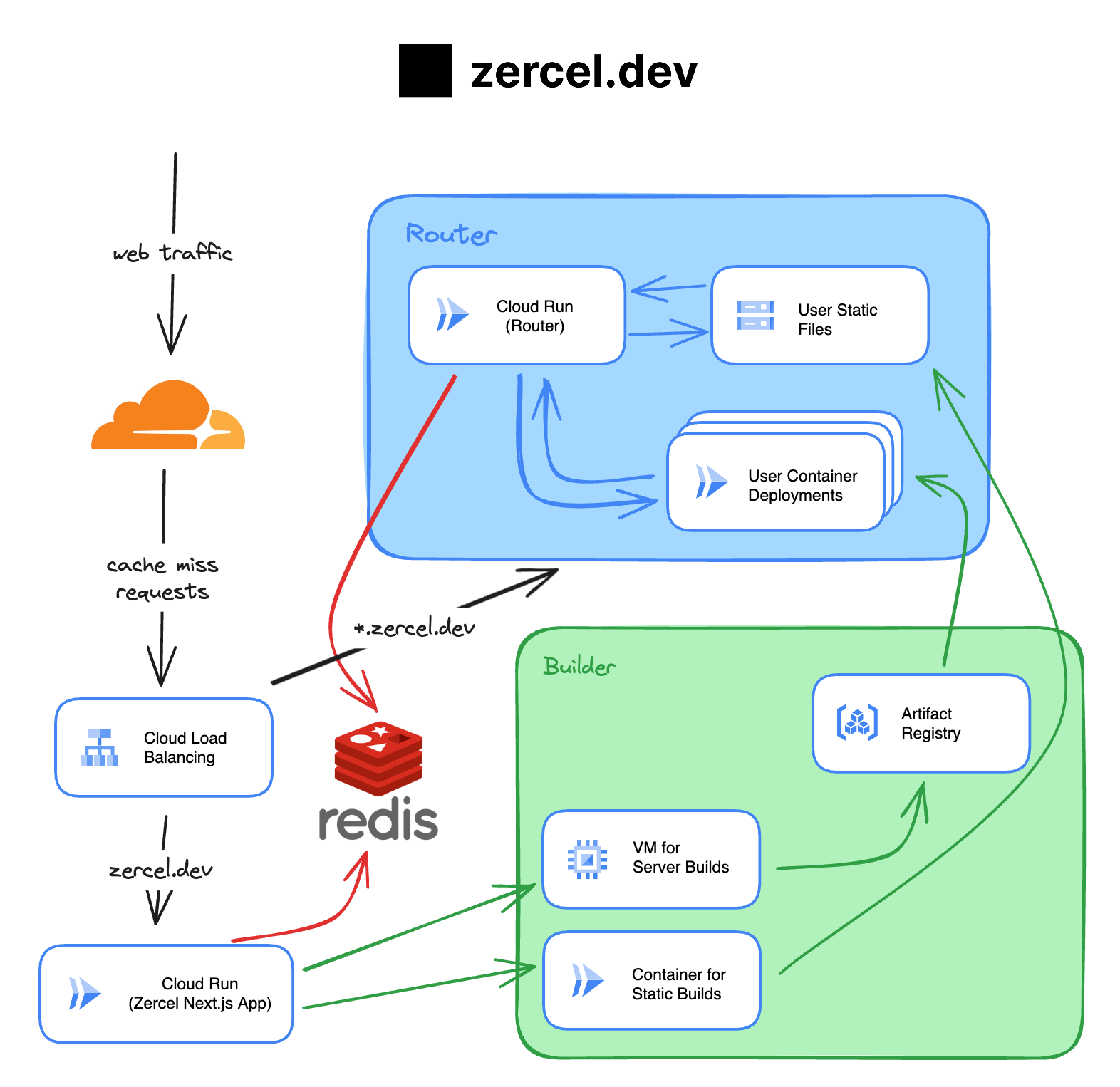

Here's an overview of what the final architecture looks like:

Final thoughts

Building Zercel has been one of the most fun and challenging projects I’ve worked on so far. It's given me a newfound respect for the folks at Vercel and the complexity of building such a platform.

I'm gonna host this project on zercel.dev for a few days, until I run out of money to pay for the GCP services. If you're interested in checking it out, feel free to deploy your site and let me know how it goes! You can also check out the source at github.com/Krish120003/zercel.

If you have any questions or feedback, feel free to reach out to me on X @n0tkr1sh. I'd love to chat about this project or anything else you're working on.

Zercel out.